Dive deep into the world of Generative AI and its potential for enterprise innovation. Learn the critical distinctions between Public and Private Large Language Models (LLMs) and how they can impact your organization’s AI initiatives.

Large language models (LLMs) continue to command a blazing bright spotlight, as the debut of ChatGPT captured the world’s imagination and made generative AI the most widely discussed technology in recent memory (apologies, metaverse). ChatGPT catapulted public LLMs onto the stage, and its iterations continue to rev up excitement—and more than a little apprehension—about the possibilities of generating content, code, and more with little more than a few prompts.

While individuals and smaller businesses consider how to brace for, and benefit from, the ubiquitous disruption that generative AI and LLMs promise, enterprises have concerns and a crucial decision to make all their own. Should enterprises opt to leverage a public LLM such as ChatGPT, or their own private one?

Public vs private training data

ChatGPT is a public LLM, trained on vast troves of publicly-available online data. By processing vast quantities of data sourced from far and wide, public LLMs offer mostly accurate—and frequently impressive—results for just about any query or content creation task a user puts to it. Those results are also constantly improving via machine learning processes. Even so, pulling source data from the wild internet means that public LLM results can sometimes be wildly off base, and dangerously so. The potential for generative AI “hallucinations” where the technology simply says things that aren’t true requires users to be savvy. Enterprises, in particular, need to recognize that leveraging public LLMs could lead employees astray, resulting in severe operational issues or even legal consequences.

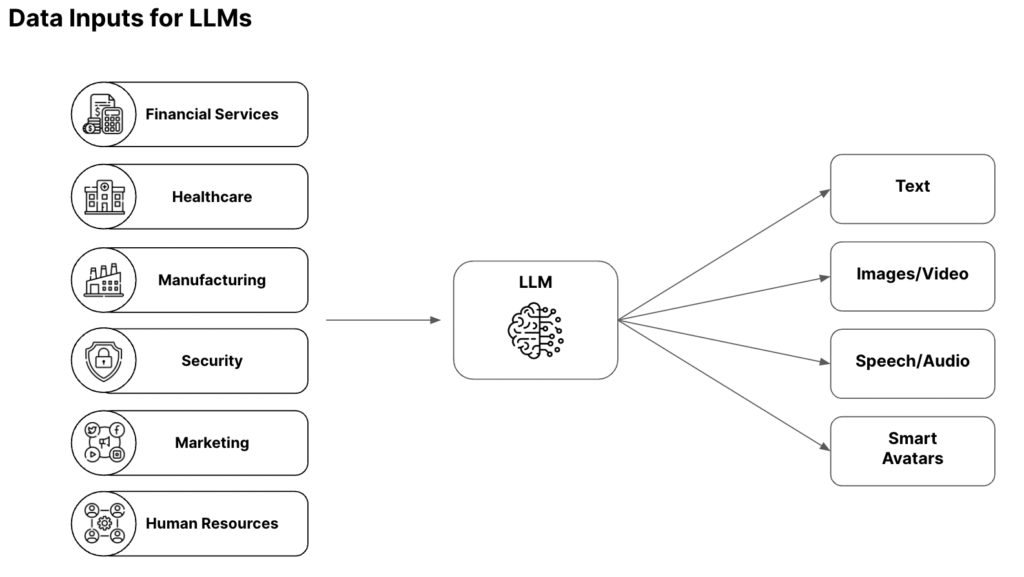

Image 1 Private LLM at inputs can include customer data, industry data, log data, product data, employee data, etc.

As a contrasting option, enterprises can create private LLMs that they own themselves and train on their own private data. The resulting generative AI applications offer less breadth, but a greater depth and accuracy of specific knowledge speaking to the enterprise’s particular areas of expertise.

Enterprises have data concerns, and must create strategic value

For many enterprises, unique data is an invaluable currency that sets them apart. Enterprises are, therefore, extremely (and rightfully) concerned about the risk that their own employees could expose sensitive corporate or customer data by submitting that data to ChatGPT or another public LLM.

This concern is based in reality: hackers now focus on exposing ChatGPT login credentials as one of their most popular targets. A hacked account can yield the entire history of an employee’s conversations with the generative AI application, including any trade secrets or personal customer data used in queries. Even in the absence of hacking, questions posed to public LLMs are harnessed in their iterative training, potentially resulting in future direct data exposure to anyone who asks. This is why companies including Google, Amazon, and Apple are now restricting employee access to ChatGPT and building out strict governance rules, in efforts to avoid the ire of regulators as well as customers themselves.

Strategically, public LLMs confront enterprises with another challenge: how do you build a unique and valuable IP on top of the same public tool and level playing field as everyone else? The answer is that it’s very difficult. That’s another reason why turning to private LLMs and enterprise-grade solutions is a strategic focus for an increasing number of organizations.

Enterprise-grade private LLM solutions arrive to meet tremendous demand

For enterprises conscious of the opportunity to leverage their own data in a private LLM tailored to the use cases and customer experiences at the heart of their business, a market of supportive enterprise-grade vendor tools is quickly emerging. For example, IBM’s Watson, one of the first big names in AI and in the public imagination since the days of its Jeopardy victory, has now evolved into the private LLM development platform watsonx. Such solutions will need to draw the line between “public baseline general shared knowledge” and “enterprise-client specific knowledge,” and where they set that distinction will be important. That said, some very powerful capabilities should come to market with the arrival of these solutions.

Image 2 The evolution to LLMs

The decision for enterprises to try to govern the usage of public LLMs or build their own private LLMs will only continue to loom larger. Enterprises ready to build private LLMs—and harness AI engines specifically tuned to their own core reference data—will likely be creating a foundation they’ll continue to rely on well into the future.

Visit AITechPark for cutting-edge Tech Trends around AI, ML, Cybersecurity, along with AITech News, and timely updates from industry professionals!