Discover how leading companies with a vested interest in AI are shaping ethical guidelines.

Introduction

1. Redefining AI Ethics

2. Consequences of AI Misuse

3. Understanding the AI Life Cycle From an Ethical Perspective

3.1. Identify the Ethical Guidelines

3.2. Conducting Assessments

3.3. Data Collection and Pre-Processing Practice

3.4. Model Development and Training

3.5. Regular Auditing and Monitoring

4. Fostering a Sustainable Future with AI Ethics

Introduction

Artificial intelligence (AI) has been a game changer in the business landscape: it can analyze massive amounts of data, make accurate predictions, and automate business processes.

However, ethical problems with AI have surfaced over the past few years and are growing as AI becomes more pervasive. Chief information officers (CIOs) therefore need to be vigilant about ethical issues and find ways to eliminate or reduce bias.

Before proceeding further, let us understand the root challenge of AI. The data sets that AI algorithms consume to make decisions have been found to carry racial and gender bias when applied in industries such as healthcare or banking, financial services, and insurance (BFSI). CIOs and their teams therefore need to focus on the data inputs, ensuring that the data sets are accurate, free from bias, and fair for all.

Thus, IT professionals must make sure that the data they use and implement in software meets the requirements for building trustworthy systems, and they must adopt a process-driven approach to ensure unbiased AI systems.

This article aims to provide an overview of AI ethics, the impact of AI on CIOs, and their role in the business landscape.

1. Redefining AI Ethics

With the emergence of big data, companies are increasingly focused on driving automation and data-driven decision-making across their departments. The intention is to improve business outcomes, but if these technologies are not implemented under clear rules and regulations, companies might experience unforeseen consequences in their AI applications due to poor research design or biased datasets.

Leading IT companies and other industries that are using AI have vested interests in shaping ethical guidelines, as these companies have themselves started to explore the use of AI to uphold ethical standards.

A lack of diligence in AI and its subfields can result in legal, regulatory, and reputational damage, as well as costly penalties.

On the other hand, the popularity of natural language processing (NLP), machine learning (ML), and deep learning applications has brought challenges that make implementing them in organizations risky; thus, CIOs need to review the ethical considerations.

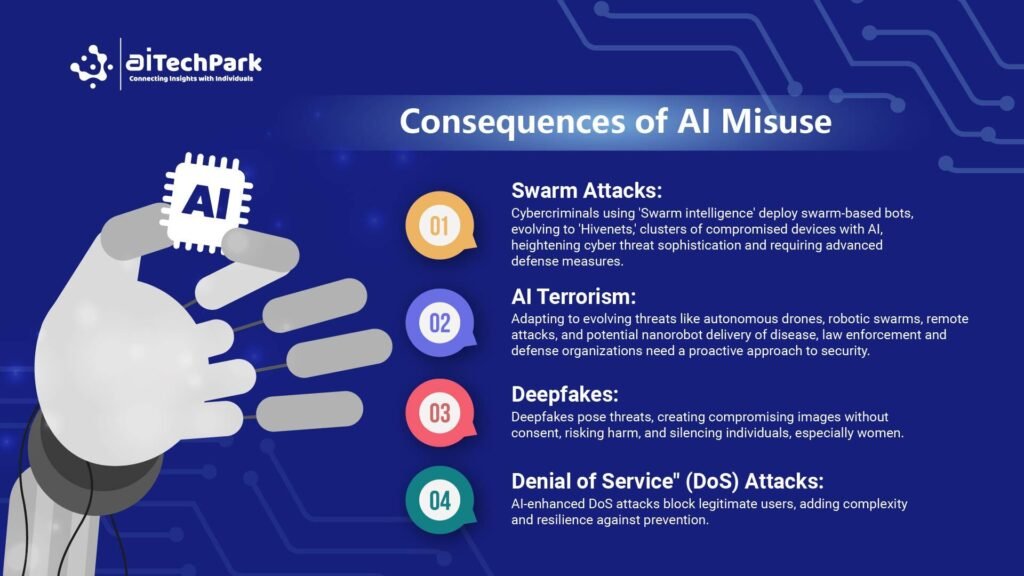

2. Consequences of AI Misuse

As mentioned above, the challenges of AI pose risks to companies’ privacy, transparency, and accountability, which in turn interferes with accurate decision-making. To reduce these risks, CIOs can develop a robust AI lifecycle from an ethical perspective.

3. Understanding the AI Life Cycle From an Ethical Perspective

3.1. Identify the Ethical Guidelines

The foundation of ethical AI responsibility is a robust AI lifecycle. CIOs can establish ethical guidelines that align with the internal standards applicable to developing AI systems and ensure legal compliance from the outset. AI professionals and companies must identify the applicable laws, regulations, and industry standards that guide the development process.

3.2. Conducting Assessments

Before commencing any AI development, companies should conduct a thorough assessment to identify biases, potential risks, and ethical implications associated with developing AI systems. IT professionals should actively participate in evaluating how AI systems can impact individuals’ autonomy, fairness, privacy, and transparency, while also keeping human rights laws in mind. The assessments yield a combined guide for developing the AI lifecycle strategically and for mitigating AI challenges.

3.3. Data Collection and Pre-Processing Practice

To develop responsible and ethical AI, AI developers and CIOs must carefully check the data collection practices and ensure that the data is representative, unbiased, and diverse, with minimal risk of discriminatory outcomes. The preprocessing steps should focus on identifying and eliminating biases that can surface while data is fed into the system, to ensure fairness when AI is making decisions.
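As a rough illustration of the representativeness check described above, the sketch below counts how large a share of a data set each group makes up and flags groups that fall under a chosen floor. The function name `representation_report`, the record layout, and the `min_share` threshold are assumptions made for this example; what counts as adequate representation depends on the use case.

```python
from collections import Counter


def representation_report(records, group_key, min_share=0.1):
    """Report each group's share of the records and flag small groups.

    records: list of dicts, one per data row (hypothetical layout).
    group_key: name of the attribute to check, for example "gender".
    min_share: illustrative floor below which a group is flagged.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    return {
        group: {
            "share": count / total,
            "under_represented": count / total < min_share,
        }
        for group, count in counts.items()
    }


if __name__ == "__main__":
    # Toy data: two records for one group, eight for another.
    sample = [{"gender": "F"}] * 2 + [{"gender": "M"}] * 8
    for group, stats in representation_report(sample, "gender", 0.3).items():
        print(group, stats)
```

A check like this only covers group counts; it says nothing about label quality or proxy variables, which need separate review.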

3.4. Model Development and Training

During the AI model development phase, ethical considerations should be integrated into the AI system’s algorithms and architecture. AI developers and CIOs must prioritize explainability, transparency, and identifying and addressing biases. Further, the training data must be reviewed and the model continuously monitored to prevent the reinforcement of biased patterns.
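One way to look for bias during model development is to compare how often the model correctly identifies positive cases in each group on labeled validation data. The sketch below computes a true positive rate per group and the gap between the best and worst group. The helper names and the `(group, y_true, y_pred)` tuple layout are assumptions for illustration, and this is only one of several fairness measures a team might choose.

```python
def true_positive_rates(examples):
    """Return the true positive rate for each group.

    examples: iterable of (group, y_true, y_pred) tuples with 0/1 labels
    (a hypothetical layout for validation results).
    """
    positives = {}
    hits = {}
    for group, y_true, y_pred in examples:
        if y_true == 1:
            positives[group] = positives.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + (1 if y_pred == 1 else 0)
    return {group: hits[group] / positives[group] for group in positives}


def tpr_gap(examples):
    """Difference between the highest and lowest per-group true positive rate."""
    rates = true_positive_rates(examples)
    if not rates:
        return 0.0  # no positive examples, so there is nothing to compare
    return max(rates.values()) - min(rates.values())
```

A large gap suggests the model serves one group worse than another and is a prompt to revisit the training data or the model before deployment.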

3.5. Regular Auditing and Monitoring

After deploying an AI system, IT professionals should regularly audit and monitor it to assess its ongoing performance, address potential ethical concerns, and identify biases. AI developers should take real-world feedback and user experience into account to continuously improve the AI system in terms of performance and transparency.
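A recurring audit of this kind can start from logged decisions. The sketch below computes the approval rate per group and the ratio of the lowest rate to the highest, then flags the system when that ratio drops below a threshold. The function names and the `(group, approved)` log format are assumptions for this example. The default of 0.8 mirrors the commonly cited "four-fifths" rule of thumb and is not a legal or universal standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group.

    decisions: iterable of (group, approved) pairs from a decision log
    (a hypothetical format).
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(bool(ok))
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so the rates are equal
    return min(rates.values()) / highest


def audit(decisions, threshold=0.8):
    """Summarize rates and flag the system if the ratio is below threshold."""
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}
```

Running `audit` on each new batch of logged decisions and tracking the ratio over time gives a simple signal for when a closer review is needed.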

Apart from developing ethically responsible AI systems, IT professionals should educate and empower users about the limitations, capabilities, and potential threats of AI systems when they interact with them.

4. Fostering a Sustainable Future with AI Ethics

Meanwhile, AI adoption is radically changing the business environment. As noted above, heightened AI usage brings heightened risks. Biases, even if introduced unintentionally, can be obscured behind human rationales. Thus, educational and preventive guidelines are needed to detect and correct biases in AI, which will help organizations and CIOs achieve better outcomes.

On the other hand, in a recent global survey, more than half of IT executives said they believe that companies that have implemented AI ethics can make ethical decisions.

AI ethics frameworks are designed so that the AI systems built under them promote responsible AI. A notable example is Google’s AI Principles, a guide for developers to design, develop, and deploy AI technologies with ethical considerations in mind.

Thus, we can conclude by saying that to mitigate unintended results and other shortcomings, CIOs and IT professionals should implement the right ethical framework.

Visit AITechPark for cutting-edge Tech Trends around AI, ML, Cybersecurity, along with AITech News, and timely updates from industry professionals!